Interpret Results (Advanced)

Our advanced articles describe how our features/systems work in detail and will sometimes require knowledge of certain scientific or technical terms.

To make good business decisions based on experiments, we recommend that game developers study multiple aspects of their A/B test results. These results include more than just the binary statement of whether there is a clear winner. Probability to be the best is the probability that a given variant’s goal metric is the highest among all variants. If this probability exceeds a set threshold for a given variant (95% in the example below), we declare that variant the winner. When a test has more than two variants, one or more of them can be a statistically significant improvement over the control variant, whether or not there is a winner.

This is captured by the quantity Improvement over control.

For binary goal metrics – e.g. conversion, retention day 1, retention day 3, etc. – we use Bayesian inference to estimate the probability density of each variant’s conversion rate. Let’s compute the metrics mentioned above for the following conversion measurements.

| Variant | Number of users | Converted users |
|---|---|---|
| “Control” | 10530 | 2114 |
| “A” | 10555 | 2137 |

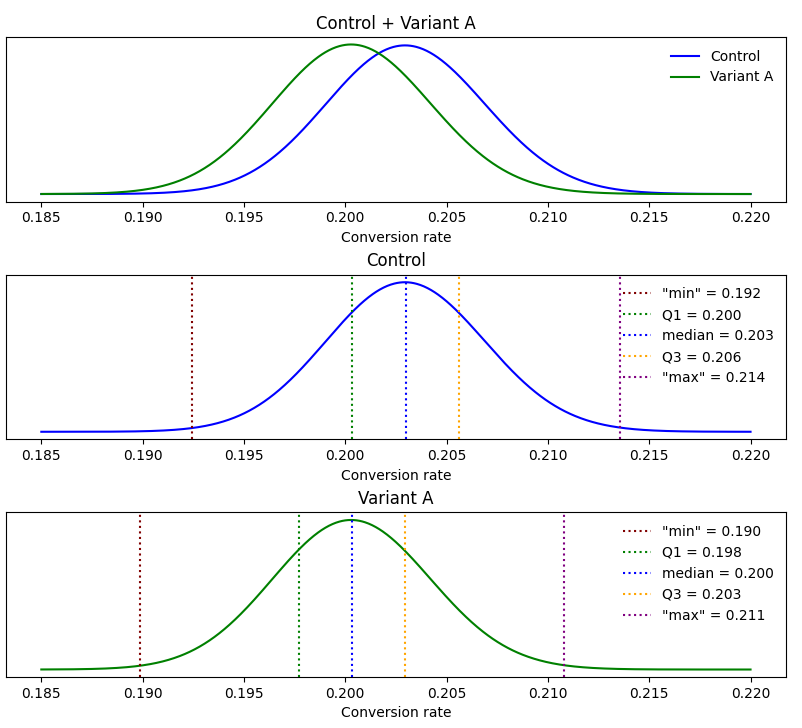

First, we compute the probability densities of the conversion rates.
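As a minimal sketch of this step, the snippet below models each variant's conversion rate with a Beta posterior. It assumes a uniform Beta(1, 1) prior and the standard Beta-Binomial update; the article does not state which prior or model is actually used, and the names `BetaPosterior` and `conversion_posterior` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BetaPosterior:
    """Beta(alpha, beta) distribution over a conversion rate."""
    alpha: float
    beta: float

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    @property
    def std(self) -> float:
        total = self.alpha + self.beta
        return (self.alpha * self.beta / (total ** 2 * (total + 1))) ** 0.5


def conversion_posterior(users, converted, prior_alpha=1.0, prior_beta=1.0):
    """Update an assumed Beta prior with observed conversions.

    The default Beta(1, 1) prior is an assumption made for this sketch.
    """
    if not 0 <= converted <= users:
        raise ValueError("converted must be between 0 and users")
    return BetaPosterior(prior_alpha + converted,
                         prior_beta + users - converted)


# Measurements of the "Control" variant from the table above.
control = conversion_posterior(users=10530, converted=2114)
print(f"posterior mean = {control.mean:.4f}, std = {control.std:.4f}")
```

With sample sizes this large, the choice of a weak prior has very little effect on the resulting density.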

We use the five-number summary to describe the probability densities of the variants. Q1 is the first quartile, which is the 25% quantile of the data set. Q3 is the third quartile, which is the 75% quantile of the data set. Minimum and Maximum are lower and upper bounds that contain the vast majority of the data set. They are not necessarily equal to the actual minimum and maximum of the data sets.
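The summary described above could be computed from posterior samples roughly as follows. This is a sketch under assumptions: the Beta(1, 1) prior, the function name `five_number_summary`, and the choice of the 0.5% and 99.5% quantiles for Minimum and Maximum are all hypothetical, since the article only says these bounds contain the vast majority of the data.

```python
import random


def five_number_summary(samples, tail=0.005):
    """Return minimum, Q1, median, Q3 and maximum of the samples.

    "minimum" and "maximum" are the `tail` and `1 - tail` quantiles,
    not the true extremes (the tail value is an assumption).
    """
    ordered = sorted(samples)
    n = len(ordered)

    def quantile(q):
        # Linear interpolation between the two nearest order statistics.
        pos = q * (n - 1)
        lower = int(pos)
        upper = min(lower + 1, n - 1)
        frac = pos - lower
        return ordered[lower] * (1 - frac) + ordered[upper] * frac

    return {
        "minimum": quantile(tail),
        "q1": quantile(0.25),
        "median": quantile(0.5),
        "q3": quantile(0.75),
        "maximum": quantile(1 - tail),
    }


# Posterior samples for "Control" (10530 users, 2114 converted),
# assuming a uniform Beta(1, 1) prior.
rng = random.Random(0)
samples = [rng.betavariate(1 + 2114, 1 + 10530 - 2114) for _ in range(20000)]
summary = five_number_summary(samples)
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```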

Then, we draw a large number of samples from both distributions to compute the Probability to be the best for both variants:

| Probability to be the best | Variant |
|---|---|
| 62% | “Control” |
| 38% | “A” |

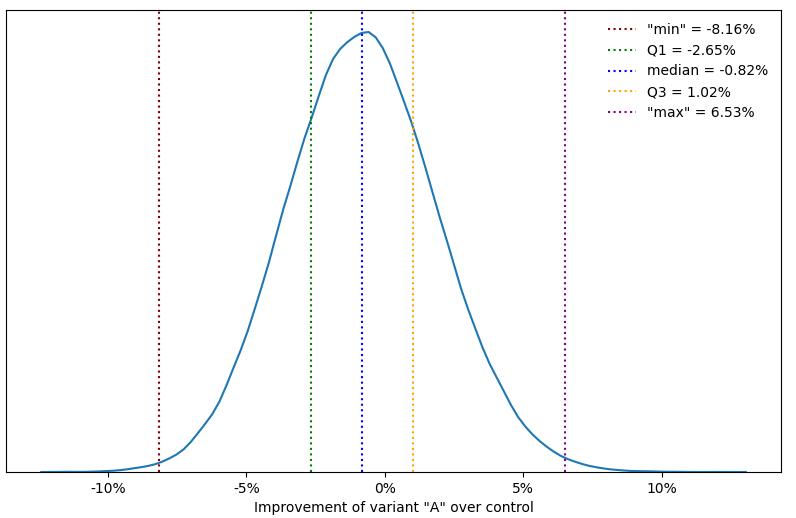
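The sampling approach can be sketched as a small Monte Carlo routine: draw one conversion rate per variant from its posterior, record which variant is highest, and repeat. The function names, the Beta(1, 1) prior, and the number of draws are assumptions for illustration, and the input counts below are hypothetical rather than the measurements from this article.

```python
import random


def probability_to_be_best(variants, draws=100_000, seed=0):
    """Estimate each variant's probability of having the highest rate.

    `variants` maps a variant name to (users, converted).
    Assumes a uniform Beta(1, 1) prior for every variant.
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in variants}
    for _ in range(draws):
        best_name, best_rate = None, -1.0
        for name, (users, converted) in variants.items():
            rate = rng.betavariate(1 + converted, 1 + users - converted)
            if rate > best_rate:
                best_name, best_rate = name, rate
        wins[best_name] += 1
    return {name: count / draws for name, count in wins.items()}


def declare_winner(probabilities, threshold=0.95):
    """Return the winning variant, or None if no variant exceeds the threshold."""
    name, prob = max(probabilities.items(), key=lambda item: item[1])
    return name if prob > threshold else None


# Hypothetical counts, chosen only to illustrate the calculation.
example = {"Control": (1000, 200), "Variant": (1000, 260)}
probs = probability_to_be_best(example)
winner = declare_winner(probs)
print(probs, winner)
```

Applied to the probabilities reported in the table above, `declare_winner({"Control": 0.62, "A": 0.38})` returns `None`, because neither value exceeds the 95% threshold.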

Since no variant has a probability of being the best performer higher than 95%, we conclude that there is no clear winner in the A/B test. Next, we compute the Improvement over control (IOC).
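The article does not spell out how the IOC is calculated. One plausible reading, sketched below purely as an assumption, is the relative difference between a variant's conversion rate and the control's, evaluated over paired posterior samples. The function name, the Beta(1, 1) prior, the 95% interval, and the input counts are all hypothetical.

```python
import random


def improvement_over_control(control, variant, draws=50_000, seed=0):
    """Summarise the relative lift of `variant` over `control`.

    Both arguments are (users, converted) tuples. The lift is assumed
    to be (variant_rate - control_rate) / control_rate.
    """
    rng = random.Random(seed)
    c_users, c_conv = control
    v_users, v_conv = variant
    lifts = []
    for _ in range(draws):
        c_rate = rng.betavariate(1 + c_conv, 1 + c_users - c_conv)
        v_rate = rng.betavariate(1 + v_conv, 1 + v_users - v_conv)
        lifts.append((v_rate - c_rate) / c_rate)
    lifts.sort()
    return {
        "low": lifts[int(0.025 * draws)],    # 2.5% quantile
        "median": lifts[draws // 2],
        "high": lifts[int(0.975 * draws)],   # 97.5% quantile
        "prob_positive": sum(lift > 0 for lift in lifts) / draws,
    }


# Hypothetical counts, chosen only to illustrate the calculation.
ioc = improvement_over_control(control=(1000, 200), variant=(1000, 260))
print(ioc)
```

Under this reading, a variant whose interval lies entirely above zero would count as a statistically significant improvement over control even when it is not the overall winner.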

In this particular experiment, we cannot conclude that the change that was introduced turned out to be an improvement over the control variant. Rather, the results suggest that the new variant performed slightly worse than control. It is important to note, though, that the analysis did not indicate a statistically significant difference between the variants.

To make the most of A/B tests, we suggest that game developers go beyond the binary statement of having a winner or not, and study every aspect of the results – winning probabilities, the improvement over control statistics, etc.